Counting service is a really fundamental service that has a lot of counting needs in business, such as likes, favorites, etc. It requires stable counting, meets long-term planning, and isolates from specific business needs.

Counting elements:

who

counting object id

counting object type

counting increment

create time

A single count is achieved by combining the above elements. Looking at it in the context of business, the counting needs are rough as follows:

Number of views for an article

Number of likes for a comment or answer

Number of purchases for a product

And so on.

enum ContentType {

ContentType_UNKNOW = 0;

ContentType_ARTICLE = 1;

ContentType_QUORA = 2;

ContentType_TOPIC = 3;

ContentType_WINELIST = 4;

ContentType_WINECOMMENT = 5;

ContentType_TIMELINE = 6;

}

enum CountingType {

CountingType_UNKNOW = 0;

CountingType_PRAISE = 1;

CountingType_LIKE = 2;

CountingType_COMMENT = 3;

CountingType_VIEW = 4;

CountingType_READ = 5;

CountingType_FORWORD = 6;

CountingType_SHARE = 7;

CountingType_DOWN = 8;

CountingType_REPORT = 9;

CountingType_BUY = 10;

CountingType_SALE = 11;

CountingType_TSASTE = 12;

CountingType_FAVORITE = 13;

}

In addition to these, there are also other needs, such as obtaining the recent count list, for example, the last 20 people who viewed an article.

Storage Structure

To meet the long-term requirements of the system, the storage structure needs to occupy as little space as possible and be highly efficient. Therefore, I have adopted a three-segment storage structure:

Segment 1: time_stamp & increment & counting_type

Segment 2: content_id & member_id

Segment 3: count

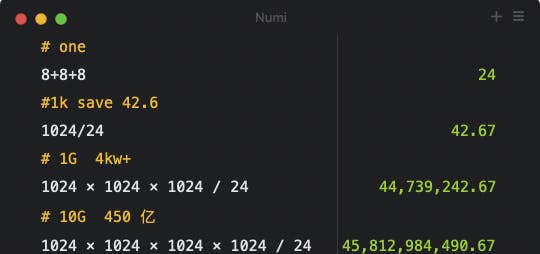

Three numbers constitute one counting operation, with each segment represented by an int64 data type. The theoretical size occupation is:

The theoretical storage size for this structure is such that 10GB of storage can hold up to 45 billion counting records, which is quite impressive.

2^8=256

2^16=65535

2^32=40 Billion

---

ONE:

+---+---------------------+------------------+--------------------+

| |counting_type id(16) | increment id(1) | timestamp(ms)41 |

+---+---------------------+------------------+--------------------+

TWO:

+-----------------------------+

| content_id(32) | creater(32)|

+-----------------------------+

THREE:

+-----------------------------+

| total (32) |

+-----------------------------+

query:

[ONE,TWO,THREE]: content_id00000000 >= content_id00000000 && content_id < (content_id+1)00000000

[[ONE,TWO,THREE],[ONE,TWO,THREE],[ONE,TWO,THREE]]

Data Structure

To add some fun to the code, I have chosen a team from a movie as the name for the data structure and assigned roles based on their character traits.

type (

MetaItem struct {

hOne *HuBayi

sTwo *Shirley

wThree WangKaiXuan

hsw []int64

H int64

S int64

W int64

}

// HuBayi: Team Core - The one in charge of every action: "Captain"

HuBayi struct {

time_stamp int64

increment bool

counting_type int64

}

// Shirley: Attentive and meticulous, responsible for intelligence work - "Spy" (or "Intelligence Officer") - records who the target is.

Shirley struct {

content_id int64

member_id int64

}

// WangKaiXuan: Greedy and responsible for managing assets and recording the total count - "Treasurer".

WangKaiXuan int64

)

About the order of these three segments, there is a small trick that will be revealed in the querying section later.

Data Structure Construction:

func NewMetaItem(h *HuBayi, s *Shirley, w WangKaiXuan) *MetaItem {

meta := &MetaItem{

hOne: h,

sTwo: s,

wThree: w,

}

meta.Setup()

return meta

}

func (this *MetaItem) Setup() {

this.H = this.hOne.Value()

this.S = this.sTwo.Value()

this.W = int64(this.wThree)

}

As an example, let's use the character "Hu Bayi":

/*

+---+---------------------+------------------+--------------------+

|0 |counting_type id(16) | increment id(1) | timestamp(ms)41 |

+---+--------------------+-------------------+--------------------+

*/

func (this *HuBayi) Value() int64 {

var incrementInt int64

if this.increment {

incrementInt = 1

} else {

incrementInt = 0

}

return this.counting_type<<CountingTypeShift |

incrementInt<<IncrmentShift |

(this.time_stamp - twepoch)

}

func (this *HuBayi) Parse(value int64) (int64, int64, int64) {

time_stamp := (value & MaxTimestamp) + twepoch

increment := (value >> IncrmentShift) & MaxIncrment

counting_type := (value >> CountingTypeShift) & MaxCountingType

return counting_type, increment, time_stamp

}

Scalability

16 bits are reserved for counting_type, which completely satisfies any future expansion requirements.

To achieve maximum scalability, all timestamps do not start from 1997, but rather from a recent time, theoretically allowing the system to operate for another 20 years.

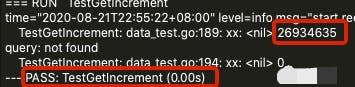

Querying

To query the total count, we only need to retrieve the last counting record, as Wang Kaixuan always records the final result after each counting operation. Here is an explanation of how to perform the query:

+---+---------------------+------------------+--------------------+

| |counting_type id(16) | increment id(1) | timestamp(ms)41 |

+---+--------------------+-------------------+--------------------+

TWO:

+-----------------------------+

| content_id(32) | creater(32)|

+-----------------------------+

As mentioned earlier, we can convert the query operation into a comparison operation, which is much more efficient than string-matching operations.

By sorting the counting records by timestamp and keeping track of the latest count for each content_id and counting_type, we can quickly obtain the total count for each counting_type. Then, we can simply sum up all the latest counts to get the total count.

Since the fields in the CountingRecord struct are all integers, we can use comparison operators to compare them, which is much faster than string-matching operations.

content_id_select := content_id << model.ContentIdIdShift

content_id_select_max := (content_id + 1) << model.ContentIdIdShift

err = this.withDBContext(func(c *mgo.Collection) error {

var err error

query := bson.M{}

query["s"] = bson.M{

"$gte": content_id_select,

"$lt": content_id_select_max,

}

err = c.Find(query).Sort("-_id").One(&it)

return err

}, this.dbSession,

getDBName(content_type),

getCollectionName(counting_type))

To find the desired record, we can use the content_id_select and content_id_select_max fields to define a range and then search for the maximum count within that range. Similarly, other query operations can be performed using this approach, such as finding the most recent likes list, whether a user has liked a certain content, the time of the like, and so on. Here is an example of how to find the maximum count within a range:

Sort the counting records by timestamp in descending order.

Iterate through the records and keep track of the latest count for each content_id and counting_type within the range defined by content_id_select and content_id_select_max.

Return the maximum count within the range.

This approach allows us to efficiently search for records within a specific range without the need to iterate through all the records, which would be much slower.

Database

That's correct. Since the system is designed using a general approach, it can be implemented on various databases including MongoDB, and the performance should not differ significantly.

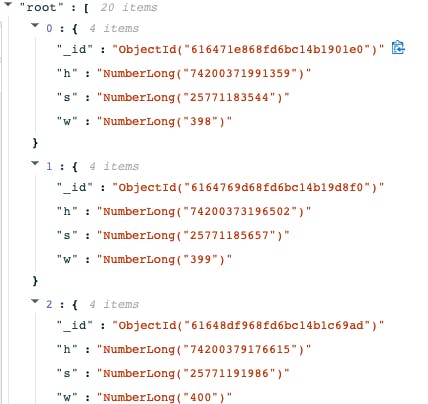

Index

it's a good idea to create an index on the "h", "s", and "id" fields since they are used in the queries to identify specific records. By creating an index, the database can quickly locate the relevant records and avoid scanning through the entire collection. This can significantly improve the performance of the queries.

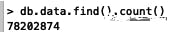

Performance

Real-time retrieval of billions of records in a single table

This system has low space consumption and high read/write performance, with a small amount of code. It has been running in production for many years.

End